Delivering premium ad inventory like a pro: The 2021 guide

By Asaf Shamly | March 30, 2021

Today’s publishers are expected to deliver ad inventory of the highest standard. Like it or not, that’s not going to change.

What does premium ad inventory mean? It’s ad inventory that meets the “holy triangle” of expectations: highly viewable, brand-safe, and fraud-free for advertisers; well paid, at the maximum possible price, for publishers; and served within a positive user experience for site visitors.

Ad inventory creation and optimization is a team effort, no doubt about it. Product, revenue, AdOps, and research and development (R&D) teams all need to invest serious effort to strike the right balance and make the stars align.

In this post, I’ll walk through the most common inventory management challenges these teams face along the way, and share some pro tips on how to move the needle and meet the holy triangle. Here’s a quick rundown of the obstacles I’ll cover below:

- Increasing viewability without losing impression volume or upsetting users

- Rendering each ad the right way

- Better selling into viewable inventory

- In-view ad refresh as part of an optimization strategy

- Complying with ad density rules

- Header bidding complexities

- Overcoming sophisticated bid shading algorithms

TL;DR

The ad industry has started moving toward giving publishers the tools they need to handle sophisticated buy-side processes. We’re on the brink of saying goodbye to tedious manual labor and welcoming processes that are more transparent, automated, and granular.

Let’s get started.

Increasing viewability without upsetting users

Five years ago, viewability became something of a currency. For a display ad to be considered viewed, at least half of its pixels need to be in the visitor’s viewport for at least one continuous second. The magic number in the industry is 70%, meaning seven out of 10 ads need to be viewable.
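The sketch below is a minimal Python version of the display viewability test and the resulting viewability rate. It assumes the page can sample, at regular intervals, what fraction of each ad’s pixels is inside the viewport. In a browser, that measurement typically comes from the IntersectionObserver API or a viewability vendor. The Sample type and function names are hypothetical. The sketch also treats the time between two qualifying samples as continuous, which is a simplification.

```python
from dataclasses import dataclass

# Display viewability: at least 50% of the ad's pixels in view
# for at least one continuous second.
MIN_VISIBLE_FRACTION = 0.5
MIN_CONTINUOUS_MS = 1000
TARGET_RATE = 0.70  # the 70% benchmark mentioned above


@dataclass
class Sample:
    t_ms: int                # when the sample was taken (ms since ad render)
    visible_fraction: float  # share of the ad's pixels inside the viewport


def is_viewable(samples: list[Sample]) -> bool:
    """True if the ad stayed >= 50% in view for >= 1 continuous second.

    Assumes samples are sorted by time and that visibility did not drop
    between two consecutive qualifying samples (a sampling simplification).
    """
    run_start = None
    for s in samples:
        if s.visible_fraction >= MIN_VISIBLE_FRACTION:
            if run_start is None:
                run_start = s.t_ms  # a new in-view streak begins
            if s.t_ms - run_start >= MIN_CONTINUOUS_MS:
                return True
        else:
            run_start = None  # streak broken; the second has to start over
    return False


def viewability_rate(impressions: list[list[Sample]]) -> float:
    """Share of measured impressions that counted as viewable."""
    if not impressions:
        return 0.0
    return sum(is_viewable(imp) for imp in impressions) / len(impressions)


imp_a = [Sample(0, 0.6), Sample(500, 0.7), Sample(1000, 0.8)]  # streak lasts 1 s
imp_b = [Sample(0, 0.6), Sample(500, 0.3), Sample(1000, 0.9)]  # streak broken
rate = viewability_rate([imp_a, imp_b])
print(rate, rate >= TARGET_RATE)  # 0.5 False
```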

Hitting that magic number without hurting the UX or upsetting users can be quite challenging, as many of the tactics publishers use force them to compromise on revenue, quality, or quantity. However, with a combination of several tactics and some out-of-the-box thinking, some publishers are successfully boosting their viewability without antagonizing their audience.

We surveyed leading publishers to find out what, if anything, helped them move the needle during 2020. Here’s what kept coming up:

- A/B templated ad layouts

- Lazy loading ads

- Compromising on sticky placements

- Redesigning the site

- Improving site speed

- AdOps footwork

- Removing demand

With these practices, publishers need to take the good with the bad. For example, A/B templated ad layouts are a great way to improve viewability, and so is a site redesign, but both are a lot of work, and results are NOT guaranteed. Lazy loading ads can do wonders for viewability, but often at the expense of impressions. And sticky placements, naturally only on desktop, can infuriate quite a few visitors if not properly implemented, driving them to install ad blockers.

AdOps footwork, perhaps the most popular of these practices to run in parallel, revolves around chasing the ad positions that perform best on quality standards such as viewability at a given point in time, and then shifting campaigns to those positions for maximum impact. Sure, it works, but it requires a lot of manual labor, leaves many high-quality impressions on the floor, and relies heavily on historical viewability data that may or may not be accurate, or even relevant.

Static ad layouts that deliver the same experience to every visitor result in low viewability rates, low CPMs, and low inventory yield.

Forward-thinking publishers have started deploying AI at the ad-call level, allowing them to make split-second decisions in the moments between a visitor entering and viewing the page.

This entails relying on hundreds of anonymized data points to personalize the ad layout for each individual visitor in real time. In short, the way a website looks for me at a certain point in time isn’t necessarily the way it will look for you, or at any other point in time.

But what the publisher will serve to both of us is a highly viewable impression, that’s for sure. As a consequence, viewability rises while user experience stays intact (or even improves).

Rendering each ad the right way

Many publishers combine eager (fixed) and lazy loading to decrease page load times and improve viewability (by serving fewer non-viewable ads, based on broad common behaviors). Typically, to keep viewability high (at a heavy cost to UX), publishers load all above-the-fold ad placements when the page loads and lazy load the below-the-fold units, rendering them gradually as the user moves down the page. The improvement in viewability is usually instant. However, it often means that 30%-50% of the potential ad requests on a given page are never initiated, because users don’t scroll far enough to trigger them.
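The eager/lazy split described above can be modeled in a few lines. This is a hypothetical Python sketch, not any ad server’s implementation. Slots above the fold are requested on page load, and the rest are requested only when the viewport gets within a fetch margin of them. Google Publisher Tag’s enableLazyLoad() exposes a similar idea through its fetchMarginPercent setting. The page geometry in the example is made up.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    name: str
    top_px: int  # distance from the top of the page to the slot


def loading_plan(slots: list[Slot], viewport_px: int, max_scroll_px: int,
                 fetch_margin_px: int) -> dict[str, str]:
    """Label each slot 'eager', 'lazy', or 'never' for one page view.

    max_scroll_px is how far the visitor scrolled; a lazy slot is requested
    once the bottom of the viewport comes within fetch_margin_px of it.
    """
    deepest_px = max_scroll_px + viewport_px + fetch_margin_px
    plan = {}
    for slot in slots:
        if slot.top_px < viewport_px:
            plan[slot.name] = "eager"  # above the fold: requested on page load
        elif slot.top_px < deepest_px:
            plan[slot.name] = "lazy"   # requested as the visitor scrolled near it
        else:
            plan[slot.name] = "never"  # visitor never got close enough
    return plan


slots = [Slot("top", 100), Slot("mid_1", 1200), Slot("mid_2", 2500),
         Slot("low_1", 4000), Slot("low_2", 6000)]
plan = loading_plan(slots, viewport_px=800, max_scroll_px=1500, fetch_margin_px=400)
never = sum(v == "never" for v in plan.values())
print(plan)
print(f"{never / len(slots):.0%} of ad requests never initiated")  # 40%
```

In this made-up page view, two of the five slots are never requested. That is the impression loss lazy loading trades for higher viewability.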

Furthermore, if not properly optimized, eager/lazy loading can actually damage viewability. For example, suppose a publisher lazy loads a placement higher up on the page, but users quickly scroll past it. The ad then renders without ever staying in view long enough to count, and viewability may end up even lower.

Finally, publishers may miscalculate when and where to start lazy or eager loading ads. Users may then watch an ad load right in front of them, which makes for an embarrassingly bad user experience.

That’s why it’s paramount that each ad gets rendered the right way.

Instead of a fixed loading rule that can hurt both ad request scale and viewability, publishers should opt for a per-impression, per-placement approach. For example, they could use real-time data on parameters such as the visitor’s device, internet speed, page attributes, and user behavior to decide exactly when and where to load an ad.

This can be achieved with AI, providing publishers with dynamic, real-time data on the specific ad inventory placements they should load (and on the ones they should avoid loading!).
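As a rough illustration of a per-impression decision, the hypothetical Python function below decides whether to request an ad for one slot right now. It uses two real-time signals: the visitor’s current scroll speed and the expected fetch-and-render time on their device and connection. The thresholds are assumptions for the sketch: a minimum margin of a quarter viewport, a maximum of three viewports, and one second of potential in-view time. An AI-driven system would learn such decisions from data rather than hard-code them.

```python
def should_request_now(slot_top_px: float, viewport_bottom_px: float,
                       viewport_px: float, scroll_speed_px_s: float,
                       est_ad_latency_ms: float) -> bool:
    """Hypothetical per-impression lazy-loading rule.

    est_ad_latency_ms: expected time to fetch and render an ad for this
    visitor (e.g. higher on a slow mobile connection than on desktop Wi-Fi).
    """
    # Distance the visitor will scroll while the ad is being fetched.
    lead_px = scroll_speed_px_s * est_ad_latency_ms / 1000
    # Fetch at least a quarter viewport ahead, at most three viewports ahead.
    margin_px = min(max(0.25 * viewport_px, lead_px), 3 * viewport_px)
    if slot_top_px - viewport_bottom_px > margin_px:
        return False  # still too far away; check again on the next scroll event
    # If the visitor is scrolling fast enough to cross a whole viewport in
    # under a second, the ad would likely never count as viewable: skip it.
    if scroll_speed_px_s > 0 and viewport_px / scroll_speed_px_s < 1.0:
        return False
    return True


# Same slot, 300 px below the viewport, three different visitors:
print(should_request_now(1300, 1000, 800, 400, 500))   # False: fast connection, can wait
print(should_request_now(1300, 1000, 800, 400, 1500))  # True: slow connection, fetch early
print(should_request_now(1300, 1000, 800, 2000, 500))  # False: skimming too fast
```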

Better selling into viewable inventory

One of the more popular strategies publishers deploy (due to the quick results it’s capable of producing) is manually shifting direct campaigns from one (lesser-performing) position to another (better-performing) position.

This typically happens when they need to guarantee viewability of at least 70% (the industry standard) for direct campaigns and/or PMPs while avoiding impression waste. They rely on general, high-level placement data. Ad requests from placements predicted to be at least 70% viewable go to a specific line item they know will typically deliver, and all other ad requests go to the open auction.

But their knowledge of each ad unit’s viewability rate is based on historical data, which is never bulletproof. So they keep an eye on the entire inventory. If viewability suddenly improves on other ad units, or worsens on the ad unit they were counting on, they reroute the ad requests accordingly to avoid wasting impressions.

That’s how publishers usually manage to sell better into viewable inventory. The strategy works, and works fairly well, but it requires a ton of footwork because viewability rates keep shifting across the inventory. More importantly, it leaves many viewable impressions on the floor. A placement whose overall viewability is below the desired 70% still generates plenty of individually viewable impressions, and those all end up in the open auction. To make things worse, without real-time data it’s impossible to predict these shifts, or when you’ll need to move your campaign again.
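The gap between placement-level and per-impression decisions is easy to see in a small sketch. It assumes a hypothetical model that outputs a predicted viewability for each incoming ad request. The Python below compares the manual, placement-level rule described above with per-impression routing. The prediction values are invented for illustration.

```python
from statistics import mean

THRESHOLD = 0.70  # viewability guarantee for direct campaigns / PMPs


def route_by_placement(predictions: list[float], threshold: float = THRESHOLD) -> list[str]:
    """Placement-level rule: if the placement's average viewability meets the
    threshold, every request goes to the direct line item; otherwise none do."""
    if predictions and mean(predictions) >= threshold:
        return ["direct"] * len(predictions)
    return ["open_auction"] * len(predictions)


def route_by_impression(predictions: list[float], threshold: float = THRESHOLD) -> list[str]:
    """Per-impression rule: each request is routed on its own prediction."""
    return ["direct" if p >= threshold else "open_auction" for p in predictions]


# One placement averaging 61% viewability, with a few highly viewable requests.
sidebar = [0.90, 0.85, 0.20, 0.30, 0.80]
print(route_by_placement(sidebar).count("direct"))   # 0
print(route_by_impression(sidebar).count("direct"))  # 3
```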

Maximizing in-view ad refresh

Another super popular strategy is in-view ad refresh, which is pretty self-explanatory. When an ad placement that has already rendered an ad stays in the user’s viewport for a predetermined time, it renders a new ad.

Usually, to handle ad refreshing, publishers use Google Ad Manager, which tracks visitor activity and engagement, and reloads ads that are 51%+ in view and engaged for at least 30 seconds.

Many publishers go for ad refresh because it sounds like a solution straight from heaven. It can boost ad revenue per session and viewability rates, all while being super easy to implement and manage.

However, in-view refresh needs to be implemented carefully. Otherwise it can be counterproductive, with impressions refreshed out of view, resulting in low viewability and CPMs.

In addition, publishers must make sure refreshed ads don’t shift the page layout and drive up cumulative layout shift (CLS). If that happens, you’re looking at an extremely poor user experience.

Furthermore, some demand partners (SSPs/exchanges) have their own refresh policies, and if you’re not paying attention, you might actually end up losing money.

Be careful not to refresh ad inventories sold through direct campaigns or when the user is inactive on the site. Also, try not to refresh AdSense inventory.
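Pulling those rules together, here is a hypothetical Python eligibility check for a single slot. It uses the 51%-in-view and 30-second thresholds mentioned above. The SlotState fields are assumptions about what your page-side code tracks. In a Google Publisher Tag setup, the actual refresh would then be triggered with googletag.pubads().refresh() for the eligible slots.

```python
from dataclasses import dataclass

MIN_IN_VIEW_FRACTION = 0.51  # "51%+ in view"
MIN_IN_VIEW_SECONDS = 30     # in view and engaged for at least 30 seconds


@dataclass
class SlotState:
    in_view_fraction: float  # current share of the slot inside the viewport
    seconds_in_view: float   # in-view time since the current ad rendered
    user_active: bool        # e.g. recent scroll, click, or key activity
    sold_direct: bool        # served by a direct campaign
    is_adsense: bool         # AdSense inventory


def can_refresh(s: SlotState) -> bool:
    """Hypothetical in-view refresh gate following the guidelines above."""
    if s.sold_direct or s.is_adsense:
        return False  # don't refresh direct-sold or AdSense inventory
    if not s.user_active:
        return False  # don't refresh for inactive visitors
    return (s.in_view_fraction >= MIN_IN_VIEW_FRACTION
            and s.seconds_in_view >= MIN_IN_VIEW_SECONDS)


print(can_refresh(SlotState(0.8, 35, True, False, False)))   # True
print(can_refresh(SlotState(0.8, 35, False, False, False)))  # False: visitor idle
```

To keep refreshes from adding layout shift, it also helps to request only creative sizes that fit the slot’s reserved space.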

In order to make the most of in-view ad refresh and not shoot yourself in the foot, make sure you track RPS (Revenue per Session), engagement rates, and fill rates both before and after refreshing your ad inventory. Keep an eye on your viewability rates while your inventory refreshes and make sure to test different types of triggers.
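A before/after comparison can be as simple as the hypothetical Python helper below. It computes RPS, fill rate, and viewability for two periods, along with their relative change. The field names and numbers are invented, and engagement rate could be added the same way.

```python
def metrics(p: dict) -> dict[str, float]:
    """RPS, fill rate, and viewability for one reporting period."""
    return {
        "rps": p["revenue"] / p["sessions"],
        "fill_rate": p["filled"] / p["ad_requests"],
        "viewability": p["viewable"] / p["measured"],
    }


def refresh_report(before: dict, after: dict) -> dict[str, tuple[float, float, float]]:
    """(before, after, relative change) for each metric."""
    b, a = metrics(before), metrics(after)
    return {k: (b[k], a[k], (a[k] - b[k]) / b[k]) for k in b}


before = {"revenue": 1000.0, "sessions": 10_000, "ad_requests": 40_000,
          "filled": 36_000, "viewable": 21_600, "measured": 36_000}
after = {"revenue": 1150.0, "sessions": 10_000, "ad_requests": 52_000,
         "filled": 41_600, "viewable": 22_880, "measured": 41_600}

for name, (b, a, change) in refresh_report(before, after).items():
    print(f"{name:12} {b:.3f} -> {a:.3f} ({change:+.1%})")
```

In this made-up example, RPS rises while fill rate and viewability drop. That is exactly the kind of trade-off worth catching before rolling refresh out everywhere.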

Pro tip: rockstar publishers leverage AI to monitor the viewability of every ad impression on a page, tracking both the in-view time of each impression and its real-time in-view percentage.

Complying with ad density rules

It’s been more than three years since the Coalition for Better Ads introduced the Better Ads Experience Program, which determined that ad density above 30% on mobile pages may result in a negative user experience. Anything that isn’t part of the content visitors came to consume (text, video, images, etc.), be it outstream video ads, sticky ads, or pretty much anything else, must not take up more than 30% of the page relative to that content.

The easiest way for a publisher to ensure they adhere to the ad density rules is to measure the pixel height of each individual component, be it content or ads, and compare the total ad height to the total content height.

$$\text{Page ad density (\%)} = \frac{(\text{No. of inline ads} \times \text{inline ad height (px)}) + \text{sticky ad height (px)}}{\text{total content height (px)}} \times 100$$

For example, if a mobile page has a content well 2,000 pixels tall, three inline 300×200 ads, and a 300×50 sticky ad, you’d calculate ad density like this:

$$\frac{(3 \times 200) + 50}{2000} \times 100 = \frac{650}{2000} \times 100 = 0.325 \times 100 = 32.5\%$$

Your total page ad density is 32.5%.

Since anything above 30% ad density is considered to degrade the mobile user experience, in this hypothetical example you should tone it down ever so slightly.
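The same calculation works as a small Python helper, which also reports how much ad height you’d need to remove to get back to the limit. The sketch assumes a single-column mobile page where ads and content are measured by height. The function names are hypothetical.

```python
DENSITY_LIMIT_PCT = 30.0  # mobile ad density threshold discussed above


def ad_density_pct(ad_heights_px: list[float], content_height_px: float) -> float:
    """Total ad height as a percentage of the main content height."""
    return sum(ad_heights_px) / content_height_px * 100


def excess_ad_height_px(ad_heights_px: list[float], content_height_px: float,
                        limit_pct: float = DENSITY_LIMIT_PCT) -> float:
    """Pixels of ad height to remove to get back to the limit (0 if compliant)."""
    allowed_px = content_height_px * limit_pct / 100
    return max(0.0, sum(ad_heights_px) - allowed_px)


ads = [200, 200, 200, 50]  # three inline 300x200 units plus a 300x50 sticky
print(round(ad_density_pct(ads, 2000), 1))  # 32.5
print(excess_ad_height_px(ads, 2000))       # 50.0
```

In the example, dropping the sticky unit, or swapping one inline 300×200 for a 300×150, would bring the page to exactly 30%.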

Well, what if you disagree with the Coalition for Better Ads and don’t care if your ad density exceeds 30%? In that case, you’re looking at a potentially significant loss of revenue. If Google flags your website as “failing” the ad density standard, you’ll get a notification and a 30-day deadline to fix the issue. Should you ignore the warning, Google will start filtering ads on your site in its Chrome browser.

Furthermore, since mid-2020, should your website be labeled as “failing” four times in the previous 12 months, Google will start filtering ads without warning. You’ll also be unable to submit a new review for as long as a month, missing out on a significant portion of your revenue.

Calculating ad density and making sure your website adheres to the rules becomes infinitely trickier when you add more variables to the equation, such as varying fill rates or lazy loading. That’s why I’d advise you to tread ever so lightly: it’s better to leave a little extra breathing room than risk being flagged by the Big G. Some publishers combine injection loading with fluid sizes (multiple potential sizes per ad placement). They are very often in violation when the injection logic is simple and/or the rendered impression is suddenly large. Imagine a short article with a couple of inline 300×600 ads, and what that does not only to UX, but also to your density compliance.
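One defensive habit is to check density against the worst case, assuming every fluid slot renders its tallest allowed size. The Python sketch below does that check. The slot configuration mirrors the short-article example above, and the 2,000 px content height is an assumption.

```python
def density_range_pct(fluid_slots: list[list[tuple[int, int]]],
                      fixed_ad_px: float, content_height_px: float) -> tuple[float, float]:
    """(best case, worst case) ad density when each fluid slot may render any
    of its allowed (width, height) sizes."""
    smallest = sum(min(h for _, h in sizes) for sizes in fluid_slots)
    tallest = sum(max(h for _, h in sizes) for sizes in fluid_slots)
    best = (smallest + fixed_ad_px) / content_height_px * 100
    worst = (tallest + fixed_ad_px) / content_height_px * 100
    return best, worst


# A short article with two fluid inline slots accepting 300x250 or 300x600,
# plus a 300x50 sticky unit.
inline_sizes = [(300, 250), (300, 600)]
best, worst = density_range_pct([inline_sizes, inline_sizes], 50, 2000)
print(f"{best:.1f}% to {worst:.1f}%")  # 27.5% to 62.5%
```

The best case passes, but a page that can render the worst case is still a compliance risk.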

One way to overcome this is to simply leverage AI to detect, in real-time, all the components of a page and create an ad inventory layout that maximizes supply without violating any guidelines.

Header bidding complexities

Header bidding, as opposed to the traditional waterfall mechanism (offering inventory to demand sources in a linear order, one at a time), owes its overwhelming popularity among publishers to one fact. It lets them democratize their demand stack: all parties compete evenly for the ad inventory at once, maximizing its revenue potential.
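The difference between the two mechanisms can be shown with a deliberately simplified Python sketch. It assumes each demand source would bid the same amount under both setups, whereas real waterfalls typically work with per-tier floors and historical averages rather than live bids. Source names and prices are made up.

```python
from typing import Optional

Bid = tuple[str, float]  # (demand source, CPM in dollars)


def waterfall(bids_in_priority_order: list[Bid], floor: float) -> Optional[Bid]:
    """Offer the impression to one source at a time, in a fixed order;
    the first source whose bid clears the floor wins."""
    for source, cpm in bids_in_priority_order:
        if cpm >= floor:
            return source, cpm
    return None


def header_bidding(bids: list[Bid], floor: float) -> Optional[Bid]:
    """All sources bid at once; the highest bid that clears the floor wins."""
    eligible = [b for b in bids if b[1] >= floor]
    return max(eligible, key=lambda b: b[1]) if eligible else None


bids = [("network_a", 1.20), ("network_b", 2.10), ("network_c", 1.80)]
print(waterfall(bids, floor=1.00))       # ('network_a', 1.2)
print(header_bidding(bids, floor=1.00))  # ('network_b', 2.1)
```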

For all its merits, header bidding is not without its complexities. It can often feel cumbersome and counterintuitive, as publishers end up setting up numerous line items for their ad inventory. Compared to the waterfall mechanism, header bidding can mean a hundred times more work.
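Part of that work comes from price buckets. Bids are usually rounded down into fixed increments, and each bucket typically needs its own line item in the ad server. The Python sketch below models this with settings similar to Prebid.js’s “medium” price granularity ($0.10 increments, capped at $20). The helper names are hypothetical, and real setups often use custom granularities.

```python
import math


def price_bucket(bid_cpm: float, increment: float = 0.10, cap: float = 20.00) -> float:
    """Round a bid down to its price bucket, capped at `cap` (all in dollars)."""
    # Work in whole cents; the tiny epsilon absorbs float error like 0.29 * 100.
    cents = math.floor(bid_cpm * 100 + 1e-6)
    cents = min(cents, round(cap * 100))
    step = round(increment * 100)
    return (cents // step) * step / 100


def line_items_needed(increment: float = 0.10, cap: float = 20.00, bidders: int = 1) -> int:
    """One line item per bucket, times the number of bidders if each bidder
    gets its own set of line items."""
    return (round(cap * 100) // round(increment * 100)) * bidders


print(price_bucket(1.57), price_bucket(25.00))       # 1.5 20.0
print(line_items_needed())                           # 200
print(line_items_needed(increment=0.01, bidders=5))  # 10000
```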

Furthermore, it can wreak havoc on page load speed, since page loading can be held up while the auction runs in the page header. Slow-loading pages risk lower ad viewability and CPMs, as visitors start leaving before the page fully loads. At the end of the day, deploying header bidding the wrong way can diminish revenue.

So, what can be done?

A popular way to reduce latency is the server-side header bidding model. In this model, the browser sends out a single request, directed at an external header bidding server, and that server handles the requests to multiple demand partners. This takes most of the auction’s load off the page, making site performance far less of an issue.

The downside of this method is the loss of cookie visibility for buyers. That can mean less targeting data behind each impression and, as a result, lower CPMs. There are different ways to tackle this issue. Even so, the expiration of third-party cookies, scheduled for 2022, may call into question the entire future of client-side header bidding.

Another popular method publishers use to try and minimize header bidding complexities is optimizing timeouts. In other words, they’re limiting the time ad networks have to return a bid before being timed out. By lowering the timeout, they hope to eliminate slower partners from the equation altogether. While it may speed up the loading, it could make a mess out of total revenue as some higher-paying bids might be timed out. This is why I’d advise you to be super careful when tweaking the numbers here.

For example:

Let’s say you’re auctioning your ad inventory to 10 bidders. Each bidder needs to place a bid, but if that takes, say, 20 seconds, the page would take far too long to load. Consequently, you need to limit the time in which they can bid. That could be one second or two seconds; it differs entirely from one publisher to the next.

But if you set a really low timeout, say 200 milliseconds, you’ll probably get a very low bid rate, because most bidders won’t be able to return a bid within such a short timeframe. If you set 10 seconds, you’ll get all the bids (that’s a lot of time, honestly), but you’re also increasing your page load time.

In short, a shorter timeout means a faster page but fewer bids and less revenue, while a longer timeout collects more bids at the cost of a slower page. The latency between those two extremes is what you need to manage.
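Here is the trade-off in numbers, as a small Python simulation. The bidder names, CPMs, and response times are invented. The simulation assumes the auction closes at the timeout or as soon as the last bidder responds, whichever comes first.

```python
from typing import Optional

# (bidder, CPM in dollars, response time in ms): hypothetical values
RESPONSES = [("a", 1.10, 150), ("b", 2.40, 900), ("c", 1.75, 450),
             ("d", 0.90, 1300), ("e", 3.05, 2200)]


def run_auction(responses, timeout_ms: int) -> tuple[int, Optional[tuple[str, float]], int]:
    """Return (bids received, winning (bidder, CPM), time the page waited in ms)."""
    received = [(name, cpm) for name, cpm, latency in responses if latency <= timeout_ms]
    winner = max(received, key=lambda r: r[1]) if received else None
    slowest = max((latency for _, _, latency in responses), default=0)
    waited_ms = min(timeout_ms, slowest)
    return len(received), winner, waited_ms


for timeout in (200, 1000, 3000):
    print(timeout, run_auction(RESPONSES, timeout))
```

With these made-up numbers, a 200 ms timeout keeps one bid at $1.10. A 1,000 ms timeout collects three bids and a $2.40 winner. Waiting for everyone takes 2,200 ms for a $3.05 winner.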

So, what’s the sweet spot here?

That’s a mountain of a question and depends heavily on a sea of variables such as bidders, devices used to access the site, traffic sources, user interface, and the geographies your visitors are coming from. Some will say 1,000ms for local bidders and 2,000ms for international ones, while others will go for 1,200ms for everyone and forget about it. It would be best to test and tweak the numbers until you get it just right.

Finally, to overcome the above, publishers often resort to a header bidding wrapper (or container, depending on which term you prefer). The wrapper sets the rules for programmatic ad inventory buying and organizes demand partners, minimizing the code complexity that comes with each new bidding partner. One of its biggest benefits is the ability to set universal timeouts for all demand partners, which forces them to return their bids within a preset timeframe. That way, publishers can eliminate slow bidders that would otherwise bog down the entire page. They also don’t have to resort to rules of thumb such as limiting the number of demand partners to fewer than five.

Overcoming sophisticated bid shading algorithms

Bid shading algorithms emerged as a compromise after Google’s Ad Exchange ditched second-price auctions for first-price auctions. They did a lot of good for advertisers, but very little for publishers.

Advertisers, armed with advanced ad inventory management tools and solutions, are able to push down the price they pay for ad inventory. They can analyze bid history, such as which bid amounts typically win on certain websites or specific ad positions. They can also predict, relatively easily, the price at which bids are lost and, from that, estimate the first and second bids.

As a result, they are able to calculate what an optimal bid should be.
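Here is a textbook-style Python sketch of the idea, not any particular DSP’s algorithm. The buyer estimates its chance of winning at each bid from historical clearing prices, then picks the bid that maximizes expected surplus, (value - bid) x P(win). Because the estimated win probability only changes at past clearing prices, it is enough to try those prices as candidate bids. The price history is invented.

```python
def shaded_bid(value_cpm: float, clearing_prices: list[float]) -> float:
    """Bid that maximizes (value - bid) * P(win) in a first-price auction.

    P(win) at a given bid is estimated as the share of past auctions whose
    clearing price was at or below that bid. Returns 0.0 (don't bid) if no
    past clearing price is below the buyer's value.
    """
    n = len(clearing_prices)
    best_bid, best_surplus = 0.0, 0.0
    for bid in sorted(set(clearing_prices)):
        if bid >= value_cpm:
            break  # bidding at or above value can't produce a positive surplus
        p_win = sum(p <= bid for p in clearing_prices) / n
        surplus = (value_cpm - bid) * p_win
        if surplus > best_surplus:
            best_bid, best_surplus = bid, surplus
    return best_bid


history = [1.00, 1.50, 2.00, 2.00, 2.50, 3.00, 4.00, 6.00]
print(shaded_bid(5.00, history))  # 2.5
```

In this made-up history, a buyer who values the impression at $5.00 bids $2.50. When it wins, the publisher collects $2.50 instead of the $5.00 the impression was worth to the buyer.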

Publishers, on the other hand, had very little to respond with. It’s basically a game of tug of war, with advertisers wearing exoskeletons and publishers, well, fighting with one arm tied behind their backs. All they could do to fight back was set a minimum reserve (floor) price. To make things even harder, they can only set one reserve price for all partners, and only based on historical data.

The good news? Advanced, AI-powered solutions are moving toward letting publishers set varying floor prices in real time. This will level the playing field and help publishers bring in higher revenues.
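As a simple, non-AI stand-in for that idea, the hypothetical Python sketch below sets a separate floor for each traffic segment. Each floor sits at a percentile of that segment’s recent winning bids, with a fallback to a static floor when there’s too little data. The segments, bids, 25th-percentile choice, and four-sample minimum are all assumptions.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def floors_by_segment(winning_bids: dict[str, list[float]], pct: float = 25,
                      fallback: float = 1.00, min_samples: int = 4) -> dict[str, float]:
    """One floor per segment (e.g. device x geo) from its recent winning bids."""
    return {
        segment: percentile(bids, pct) if len(bids) >= min_samples else fallback
        for segment, bids in winning_bids.items()
    }


history = {
    "mobile_US": [1.20, 1.80, 2.00, 2.40, 3.10, 3.50, 4.00, 4.20],
    "desktop_DE": [0.40, 0.60, 0.70, 0.90],
    "tablet_FR": [2.00],  # too little data: keep the static floor
}
print(floors_by_segment(history))
# {'mobile_US': 1.8, 'desktop_DE': 0.4, 'tablet_FR': 1.0}
```

Compare this with one $1.00 floor for everything. The mobile_US floor rises, while desktop_DE, whose winning bids all sit below $1.00, is no longer priced out entirely.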

Closing comments

The age of print publications is behind us. In order to achieve the high standards that come with a premium ad inventory, publishers need new weapons and toys in their arsenal. Modern publishers have plenty of ad real estate, and these assets no longer need to be static.

Delivering premium ad inventory is no longer a luxury; it’s an absolute necessity. But such a high level of ad inventory optimization is difficult to achieve. It requires publishers to check all four boxes: high viewability, maximum scale, maximum price, and an intact user experience. Miss one and they’ve lost the game.

Advanced tech, such as artificial intelligence, ad inventory forecasting, and machine learning, now enables real-time decisions around all ad inventory management aspects. Many publishers are still stuck in traditional, old-fashioned manual ways of working and are yet to fully tap into these new, revolutionary solutions.

We are thrilled to be at the forefront of this paradigm shift, watching as publishers, one after the other, realize the full potential of AI behind their efforts. They are making smart, personalized decisions around ad locations, ad loading, inventory structure, bid optimization, user engagement prediction, pricing, and more. The best in inventory performance optimization is yet to come.

Latest Articles

-

Do NOT make me choose between a great UX and boosting my revenue!!

The ongoing clashes between revenue, product, and editorial teams are painful to say the least. It doesn’t have to be this way.

View Now -

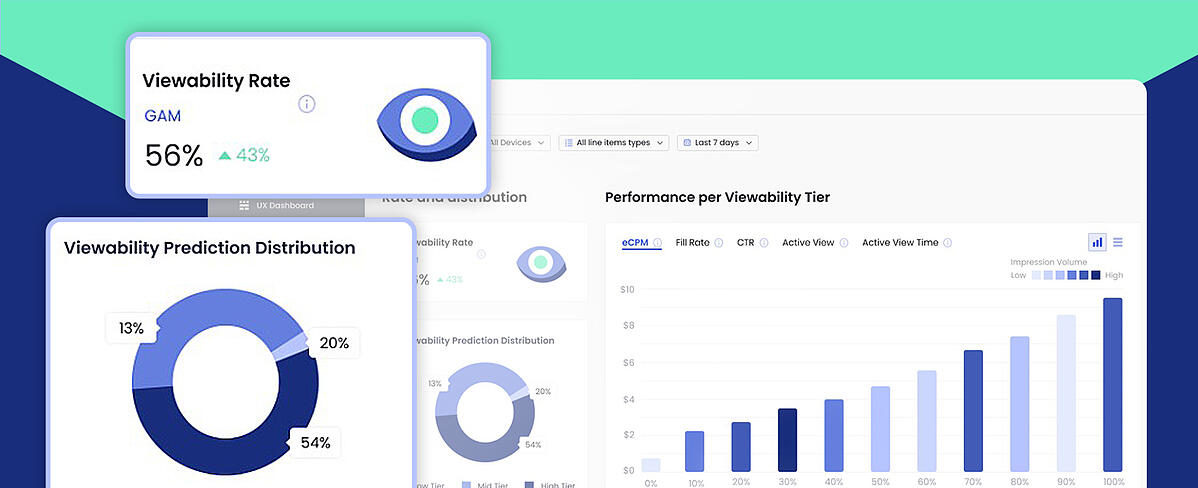

Your Brand New Viewability Dashboard!

Understand your inventory breakdown with the new Viewability Dashboard.

View Now -

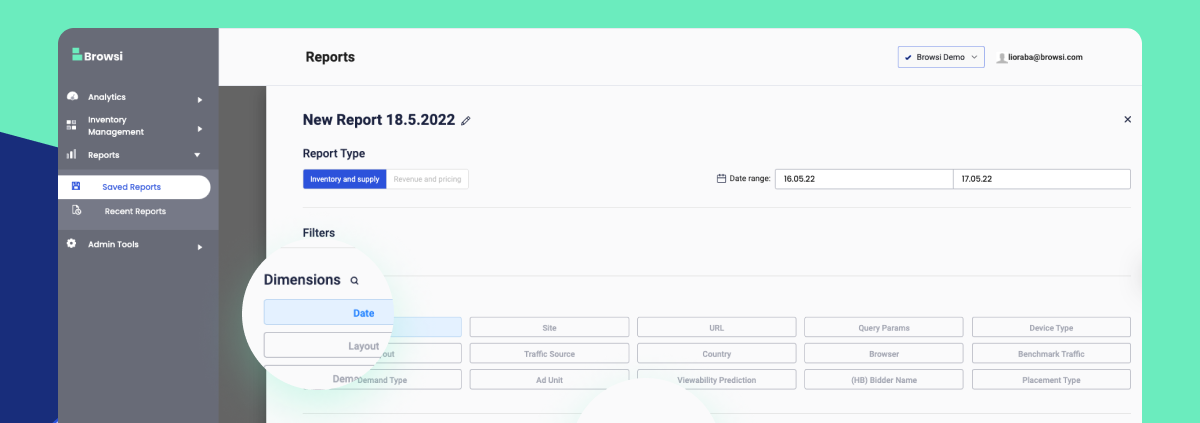

Maximize Your UX & Revenue Data With Personalized Reports

Ready to uncover insights and step up your ad layout strategy?

View Now